With the promise of quicker diagnosis, individualized treatment regimens, and more efficient hospital operations, artificial intelligence (AI) has become a disruptive force in contemporary healthcare. AI tools are already being used to improve patient care in fields ranging from radiology to mental health support. But while we celebrate these advances, a serious issue often goes overlooked: AI bias in healthcare. It is less visible than a malfunctioning device or a spoiled medication, but its consequences can be just as harmful. Bias quietly seeps into decisions and systems, skewing outcomes in ways that can discriminate against people based on their race, gender, or socioeconomic background. And that threatens the basic principle of treating every patient fairly.

What Is AI Bias in Healthcare?

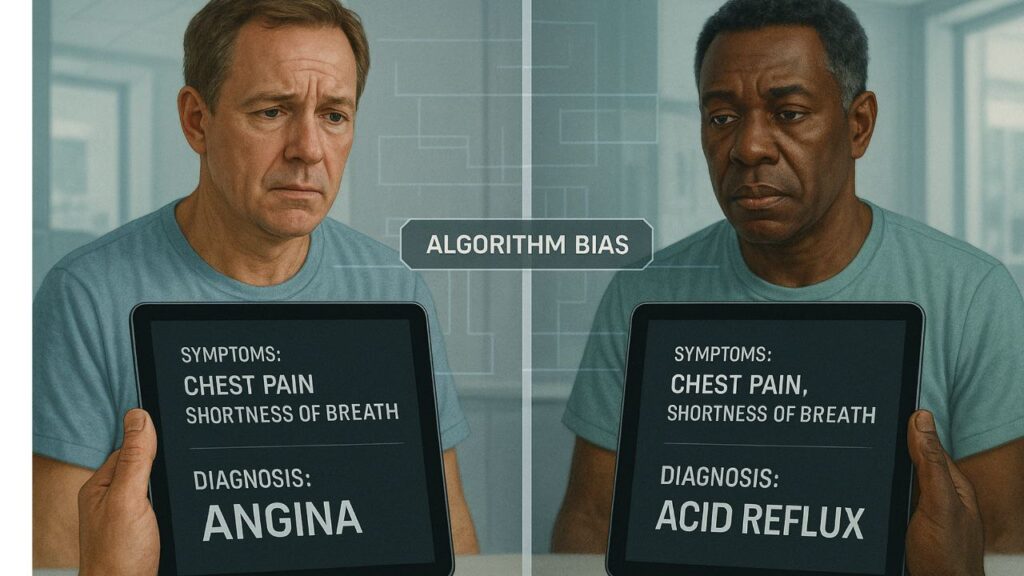

AI bias in healthcare is the systematic, unfair discrimination that occurs when AI systems draw conclusions from skewed or incomplete data. These systems learn from large datasets such as test results, medical records, and demographic information. Any biases, errors, or inequities present in those datasets are absorbed by the AI and can even be amplified. For instance, if an AI system is trained mostly on data from white male patients, it may fail to recognize symptoms that present differently in women or people of color. The result? Misdiagnoses, delayed treatment, or even fatal mistakes.

Bias does not have to look like overt racism or sexism. It can take the form of algorithmic assumptions that overlook minority populations, missed correlations, or uneven representation in the data. When AI outputs are accepted without human oversight, these subtle flaws can grow into significant healthcare inequities.

Real-World Examples of AI Bias in Medicine

Let’s move past the theory and look at real-world examples of AI bias. One well-known case is a 2019 study published in Science, which found that a widely used algorithm that US health systems relied on to decide which patients needed extra care was less likely to refer Black patients than equally sick White patients. Why? The model used healthcare costs as a proxy for health needs. Because Black patients often incurred lower medical costs due to barriers to accessing care, the algorithm wrongly treated them as healthier. The result was deeply biased recommendations.
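To see how a cost proxy can skew referrals even when need is identical, here is a toy simulation. It is not the algorithm from the study: the two groups ("A" and "B"), the cost figures, and the assumption that group B spends 40% less per chronic condition because of access barriers are all hypothetical, and the function names are made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Patient:
    group: str               # hypothetical demographic group label ("A" or "B")
    chronic_conditions: int  # stand-in for true health need
    annual_cost: int         # what a cost-based model "sees"

def make_patients() -> list[Patient]:
    """Build two groups with identical need. Group B is ASSUMED to spend
    40% less per condition because of barriers to accessing care."""
    patients = []
    for need in range(1, 11):  # need levels 1..10 in each group
        patients.append(Patient("A", need, need * 1000))
        patients.append(Patient("B", need, need * 600))
    return patients

def refer_top_fraction(patients: list[Patient],
                       score: Callable[[Patient], float],
                       fraction: float = 0.5) -> list[Patient]:
    """Refer the highest-scoring fraction of patients to extra care."""
    ranked = sorted(patients, key=score, reverse=True)
    return ranked[: int(len(ranked) * fraction)]

def referral_rate(referred: list[Patient], patients: list[Patient], group: str) -> float:
    """Share of a group's patients who were referred."""
    total = sum(1 for p in patients if p.group == group)
    chosen = sum(1 for p in referred if p.group == group)
    return chosen / total

if __name__ == "__main__":
    patients = make_patients()
    by_cost = refer_top_fraction(patients, score=lambda p: p.annual_cost)
    by_need = refer_top_fraction(patients, score=lambda p: p.chronic_conditions)
    for label, referred in [("cost proxy", by_cost), ("true need", by_need)]:
        a = referral_rate(referred, patients, "A")
        b = referral_rate(referred, patients, "B")
        print(f"{label}: group A {a:.0%}, group B {b:.0%}")
```

Under these assumptions, ranking by cost refers 60% of group A but only 40% of group B, even though both groups have exactly the same distribution of need. Ranking by need itself refers 50% of each. Nothing in the code mentions group membership; the disparity comes entirely from the choice of target.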

Dermatology apps trained mostly on images of light skin are another example. These tools, designed to detect skin cancer and other conditions, can perform poorly for patients with darker skin tones. A melanoma, for instance, may go undetected because the AI has rarely or never seen how it appears on darker skin.

Gender bias shows up too. Several heart attack prediction algorithms have been found to perform worse for women, largely because historical data misrepresented how heart disease presents in female patients. Since heart disease is one of the leading causes of death among women, this gap is especially dangerous.

How Bias Enters AI Systems

So where does this bias come from? An AI model is only as good as the data it is fed, and that data comes from a healthcare system that is already unequal. Whatever biases exist in historical medical records, and many records contain them, the AI will inherit. For example, if women’s reports of pain were often dismissed in the past, the data will reflect that undertreatment rather than their true need, leading the AI to draw the wrong conclusions going forward.

Beyond flawed data, there is the problem of algorithm design. Many developers, particularly those at software companies rather than hospitals, may lack a deep understanding of medical diversity. This can lead to broad assumptions that ignore differences in age, gender, and ethnicity. In addition, models are often trained on data from a single institution or region, which may not reflect the wider population. In short, bias creeps into both the data pipeline and the development process.

Who Gets Affected and Why It Matters

Is it really a big deal if AI bias only affects certain groups, you ask? Absolutely. Marginalized groups, including women, racial and ethnic minorities, older adults, rural residents, and people with disabilities, are disproportionately affected by AI bias. These are often the same groups that are already most vulnerable in traditional healthcare settings. When AI tools that are meant to level the playing field instead widen existing gaps, we risk hardcoding inequity into the future of medicine.

There is also the question of trust. Many communities already distrust healthcare systems, especially where discrimination has a long history. Biased AI results can erode that trust further. People may feel shut out of the promise of tech-enabled medicine, avoid seeking care, or doubt their diagnoses. This is a human rights issue, not just a technical one. Healthcare must be built on equity, not exclusion.

Solutions to Reduce or Prevent Bias

The good news? AI doesn’t have to be biased. In fact, with the right strategies, it can help reduce existing disparities. Here are a few practical solutions:

- Diverse datasets: AI systems must be trained on data that reflects the full spectrum of patients—across race, gender, geography, and health conditions.

- Bias audits: Independent reviews and testing can uncover hidden biases in algorithms before they are deployed at scale.

- Transparent model design: Developers should make their models explainable, so clinicians can understand how decisions are made.

- Inclusive development teams: A more diverse workforce in AI development can spot problems that a homogeneous group might overlook.

- Regulations and standards: Governments and medical institutions must enforce ethical guidelines and require bias checks for AI tools.
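As a small illustration of what "diverse datasets" and "bias audits" can mean in practice, the sketch below compares each group's share of a training set with its share of the population the tool is meant to serve, and flags groups that fall well short. The skin-tone labels, the reference shares, and the 0.8 cutoff are all hypothetical choices for illustration, not an established standard.

```python
from collections import Counter

def representation_report(train_groups: list[str],
                          reference_shares: dict[str, float],
                          min_ratio: float = 0.8) -> dict[str, tuple[float, float, bool]]:
    """For each group, compare its share of the training data with its share of a
    reference population. Returns (train_share, ratio, flagged) per group, where
    flagged means the ratio fell below min_ratio (an arbitrary, assumed cutoff).
    Reference shares are assumed to be positive."""
    counts = Counter(train_groups)
    total = len(train_groups)
    report = {}
    for group, ref_share in reference_shares.items():
        train_share = counts[group] / total  # Counter returns 0 for unseen groups
        ratio = train_share / ref_share
        report[group] = (train_share, ratio, ratio < min_ratio)
    return report

if __name__ == "__main__":
    # Hypothetical skin-tone labels for a dermatology training set.
    train = ["light"] * 850 + ["medium"] * 120 + ["dark"] * 30
    # Hypothetical shares in the population the tool will serve.
    population = {"light": 0.60, "medium": 0.25, "dark": 0.15}
    for group, (share, ratio, flagged) in representation_report(train, population).items():
        note = "UNDERREPRESENTED" if flagged else "ok"
        print(f"{group:>6}: {share:.1%} of training data, {ratio:.2f}x expected -> {note}")
```

With these made-up numbers, the "medium" and "dark" groups are flagged. A check like this only reveals gaps in representation; whether the model actually performs worse for those groups still has to be measured on real outcomes.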

When done right, AI can become a force for good, spotting gaps that human practitioners miss and offering fairer, evidence-based support.

The Role of Healthcare Professionals and AI Developers

Healthcare professionals aren’t off the hook just because AI tools are in play. In fact, doctors, nurses, and hospital administrators must stay actively involved in how AI is selected, tested, and used. They can advocate for patient populations, flag suspicious patterns, and demand improvements from tech vendors.

On the other side, AI developers must collaborate with medical experts and treat healthcare as more than just another dataset. Building tools that work for everyone means understanding that health isn’t a one-size-fits-all issue. Medical ethics, patient diversity, and cultural sensitivity should be embedded into every stage of the AI development process.

Frequently Asked Questions (FAQs)

Q1: What is AI bias in healthcare?

AI bias in healthcare refers to systematic errors in algorithmic decision-making that unfairly disadvantage certain groups—especially marginalized communities. This happens when AI systems are trained on biased data or built without considering diversity in patients. It can lead to misdiagnosis, unequal treatment, and poorer health outcomes.

Q2: Can AI bias in healthcare be completely eliminated?

While it may be impossible to remove 100% of bias, it can be significantly reduced through thoughtful design, regular auditing, and inclusive data collection. The key is to recognize that bias exists and build systems that actively guard against it rather than ignore it.

Q3: Who is most at risk from biased AI in medicine?

Marginalized groups—such as racial and ethnic minorities, women, and rural patients—are most at risk. These populations are often underrepresented in training data and may receive less accurate or delayed care as a result.

Q4: How do developers test AI tools for bias?

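Developers use methods such as fairness metrics (for example, comparing error rates or positive-prediction rates across groups), demographic subgroup analysis, and third-party audits. In practice, this means measuring the AI’s performance separately for each population, such as by race, sex, or age group, and checking whether accuracy or error rates differ meaningfully.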
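A minimal sketch of one such subgroup check: compute sensitivity (the share of patients who truly have a condition that the model catches) separately for each group, then look at the gap between groups. A gap in true positive rates is closely related to what is often called "equal opportunity" in the fairness literature. The labels, predictions, and function names below are hypothetical.

```python
from collections import defaultdict

def subgroup_sensitivity(y_true: list[int], y_pred: list[int],
                         groups: list[str]) -> dict[str, float]:
    """Sensitivity (true positive rate) per group: of the patients who truly
    have the condition, what fraction did the model flag?"""
    caught = defaultdict(int)
    positives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                caught[group] += 1
    return {g: caught[g] / positives[g] for g in positives}

def sensitivity_gap(rates: dict[str, float]) -> float:
    """Largest difference in sensitivity between any two groups
    (0.0 means every group's true cases are caught at the same rate)."""
    return max(rates.values()) - min(rates.values())

if __name__ == "__main__":
    # Hypothetical labels (1 = has the condition) and model predictions.
    groups = ["men"] * 8 + ["women"] * 8
    y_true = [1, 1, 1, 1, 1, 0, 0, 0] + [1, 1, 1, 1, 1, 0, 0, 0]
    y_pred = [1, 1, 1, 1, 0, 0, 0, 1] + [1, 1, 0, 0, 0, 0, 1, 0]
    rates = subgroup_sensitivity(y_true, y_pred, groups)
    for group, rate in rates.items():
        print(f"{group}: sensitivity {rate:.0%}")
    print(f"gap: {sensitivity_gap(rates):.0%}")
```

In this made-up example the model catches 80% of true cases among men but only 40% among women, a 40-point gap that a single overall sensitivity figure (60%) would hide.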

Q5: What role do doctors play in preventing AI bias?

Doctors can identify biased outcomes in clinical settings, report them, and push for tools that better serve all patient groups. Their feedback is crucial in refining AI tools and making them safer and more equitable.

Q6: Is regulation keeping up with AI in healthcare?

Regulation is catching up, but slowly. Agencies like the FDA and WHO are beginning to address AI ethics, but stronger, clearer policies are needed to ensure all AI in hospitals is safe, fair, and transparent.

Final Thoughts: Can AI Heal Without Harming?

Artificial intelligence holds enormous potential to revolutionize healthcare. But like any powerful tool, it must be used responsibly. Bias in AI isn’t just a glitch—it’s a mirror reflecting our societal flaws. If left unchecked, it could hardwire discrimination into medical care. But if addressed with honesty, transparency, and inclusive innovation, AI can also become a catalyst for fairness.

So here’s the real question: Can we build healthcare AI that heals without harming? That answer lies not in the technology itself, but in the hands of those who design and deploy it.

Hi, I’m Santu Kanwasi, a passionate blogger with over 2 years of experience in content writing and blogging. I create original, informative, and engaging articles on a wide range of topics including news, trending updates, and more. Writing is not just my profession—it’s my passion. I personally research and write every article to ensure authenticity and value for my readers.

Whether you’re looking for fresh perspectives or reliable updates, my blog is your go-to source!